Aldebaran is in judicial liquidation (“liquidation judiciaire”), and an auction to sell its assets will take place on 10 July:

You can follow the auction online here.

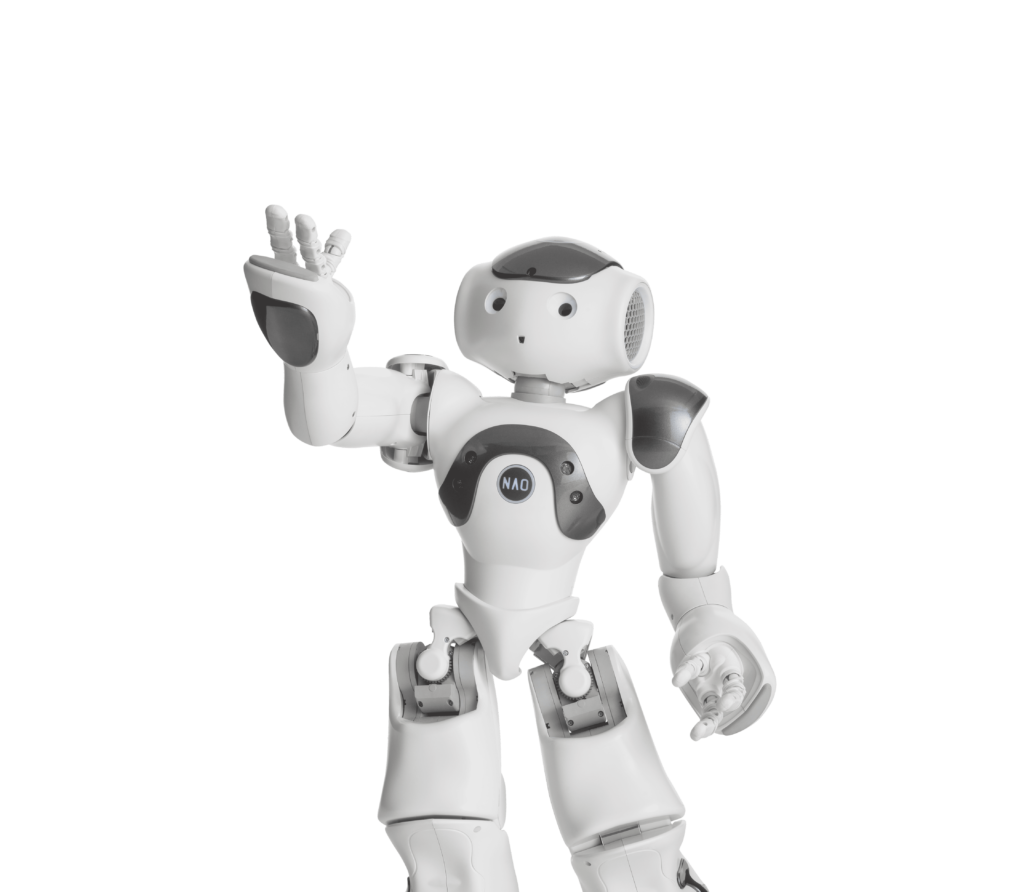

The famous humanoid robot for immersive experiences in Education and Healthcare

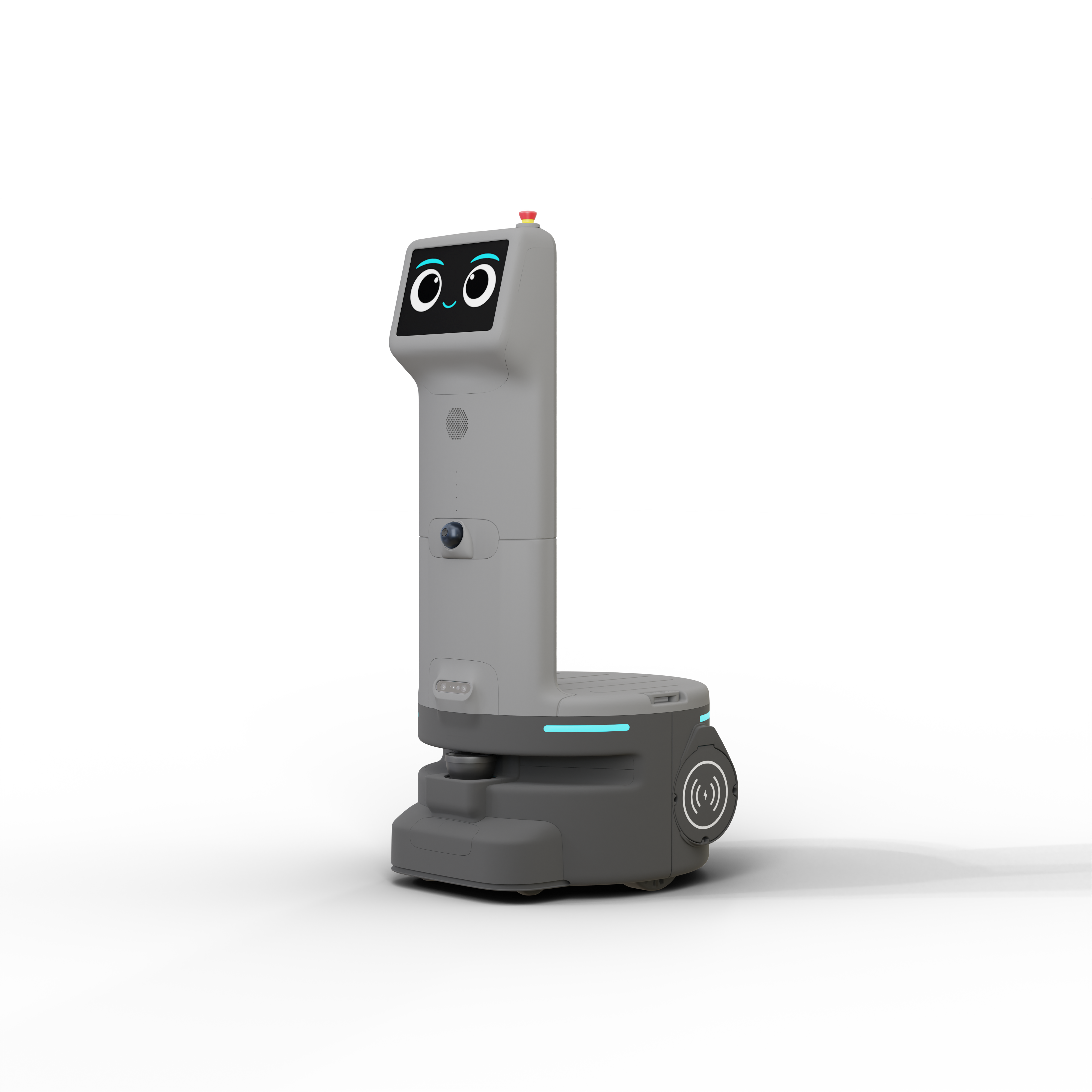

Need a helping hand? Let Plato take the load off your shoulders!

The programmable humanoid robot and its tablet for visual interactions.

Discover NAO7, to be released in 2026, with advanced AI capabilities designed to make it the best companion in Education and Healthcare.

A versatile and customizable service robot for all assistance needs.

Immersive experiences from Teaching to Programming.

Staff & Patient assistance, Cognitive activities for seniors...

Relieve staff pain points in daily operations

“We must consider today, in the same way that water is more important than gold, that humans are more important than machines”

For years, product security and safety have been Aldebaran's main concerns and a real differentiator. We are committed to delivering robots that operate without harming end users and that do not represent a potential point of weakness for information systems.

Data Privacy

Our robots and services are secure by design and compliant with personal data legislation (GDPR). In particular, Aldebaran strives to minimize the amount of data processed.

Safety certifications

Our robots, such as NAO6 and Plato, are certified under the Radio Equipment Directive 2014/53/EU, including IEC 62368-1 and Class B for EMC (Europe only). Plato is specifically certified under the Machinery Directive 2006/42/EC, with ISO 13482 and safety functions under ISO 13849-1. These certifications allow our robots to operate safely in commercial and healthcare environments.

Aldebaran is the global leader in humanoid robotics, recognized since 2005 for its innovative, empathetic human-robot interactions.

With NAO, Pepper, and Plato already deployed in over 70 countries, Aldebaran is transforming industries with concrete solutions for healthcare, education, retail, and tourism.
